Overview – Physicalism

Physicalist theories of the mind argue that the universe is made of just one kind of thing: physical stuff.

According to physicalism, everything that exists – including the mind and mental states – is either a physical thing or supervenes on physical things. This means that two physically identical things must be mentally identical.

The opposing view to physicalism is dualism – the view that there are two kinds of things: physical things and mental things.

Physicalist theories all agree that the mind is a physical thing (or supervenes on physical things). But they disagree about what kind of physical thing mental states are. The syllabus looks at four physicalist explanations of what mental states are:

| | Behaviourism | Type identity | Functionalism | Eliminativism |
|---|---|---|---|---|
| Summary | Mental states are behavioural dispositions | Mental states are brain states | Mental states are functions within a cognitive system | The folk psychology model of mental states is totally wrong |
| Example | Pain is a disposition to say “ouch!” and rub the area that hurts | Pain is c-fibres firing in the brain | Pain is an unpleasant sensation that causes mental states such as a desire to get away from the pain source | The concept of pain should be eliminated in favour of a neuroscientific alternative |
| Arguments for | | | | |
| Arguments against | | | | |

Behaviourism

Behaviourism says the meaning of words used to describe mental states – such as ‘pain’, ‘sad’, ‘happy’, ‘think’ etc. – is all about what is externally observable, i.e. behaviour and behavioural dispositions.

So, for a behaviourist, the meaning of ‘pain’ is to wince, say “ouch!”, try to get away from the source of the pain, have an elevated heart rate, and so on. Notions of private inner sensations (e.g. qualia) are irrelevant to what ‘pain’ means – it’s all about the external and observable (i.e. behavioural) manifestations.

Some definitions

Hard and soft behaviourism

The syllabus lists a distinction between two different types of behaviourism:

- Hard behaviourism (e.g. Carl Hempel): All propositions about mental states can be reduced without loss of meaning to propositions about behaviours and bodily states using the language of physics

- Soft behaviourism (e.g. Gilbert Ryle): Propositions about mental states are propositions about behavioural dispositions

In other words, hard behaviourism says you can give a complete account of the mind purely in terms of actual behaviours and bodily states. If you completely describe a person’s physical state and behaviours, you have described their mind – there’s nothing left over. In the language of philosophy, hard behaviourism says mental states analytically reduce to behaviours (and other externally observable physical facts).

In contrast, soft behaviourism says that propositions about mental states (e.g. “he is in pain”) are propositions about behavioural dispositions (see below).

Behavioural dispositions

Hard behaviourism says mental states reduce to behaviours. But an obvious problem for this is that you can have a mental state but not have the associated behaviour. For example, you can be in pain but stop yourself from saying “ouch!” – perhaps because you don’t want to look like a wimp. Similarly, you can pretend to be in pain (i.e. display the behaviour) when you’re not actually feeling anything, like when a player dives in football. This is why soft behaviourism analyses mental states in terms of behavioural dispositions, not just actual behaviours.

A disposition is how something will or is likely to behave in certain circumstances. For example, a wine glass has a disposition to break when dropped on a hard surface. The wine glass has this disposition even when it hasn’t been dropped and is in perfect condition because – hypothetically – if you dropped the glass, it would break.

Likewise, someone in the mental state of pain will have a disposition to say “ouch!” – even if they don’t actually do so in every instance. Or, hypothetically, if you were to ask someone who just stubbed their toe “did that hurt?”, they would answer “yes”. The person in pain has this disposition (to say “yes”) even if you never actually ask them the question, just like the glass has a disposition to break even if you never actually drop it.

Gilbert Ryle: The Concept of Mind

Note: Ryle is arguing for soft behaviourism.

Arguments against dualism

Ryle’s arguments for behaviourism start with some of the criticisms of dualism we looked at previously – such as the problem of other minds and the problem of causation. He then gives a new argument against dualism: that if dualism were true, mental concepts would be impossible to use.

Consider this: if dualism were true and mental states such as pain referred only to a private and non-physical mental state, how could we ever talk about them? I can’t literally show you what is going on in my mind when I am in pain. You can’t point to a mental state such as pain, you can only point to the behaviour.

Category mistake

Ryle argues that to think mental states are distinct from their associated behaviours (as dualism claims) is to make a category mistake – it confuses one type of concept with another. For example, to ask “how much does the number 7 weigh?” confuses the concept of number with the concept of things that have weight.

Ryle gives the following example to illustrate why dualism makes a similar category mistake: Suppose someone were to visit Oxford to see the university. The visitor is shown the library, the lecture theatres, the teachers, and so on. After the tour is complete, he says: “but where is the university?”

The visitor has made a category mistake in thinking that the university is something other than the things he’s been shown already. The visitor thinks the university is in the category of objects you can isolate and point to – that it’s one thing that can be isolated independently of all the stuff he’s been shown.

Ryle argues that dualists make the same sort of category mistake when talking about mental states.

Suppose an alien were to ask what the mental state of pain is. You show the alien people stubbing their toes, being tortured, wincing, saying “ouch!”, and so on. After showing the alien these examples of pain it asks: “but what is pain?”

In just the same way Oxford University is nothing more than the buildings, teachers, and so on, Ryle is arguing that the mental state of pain is nothing more than the various behavioural dispositions associated with pain. There is nothing you can show the alien over and above these behavioural dispositions – these behaviours and dispositions are what pain is.

Problems for Behaviourism

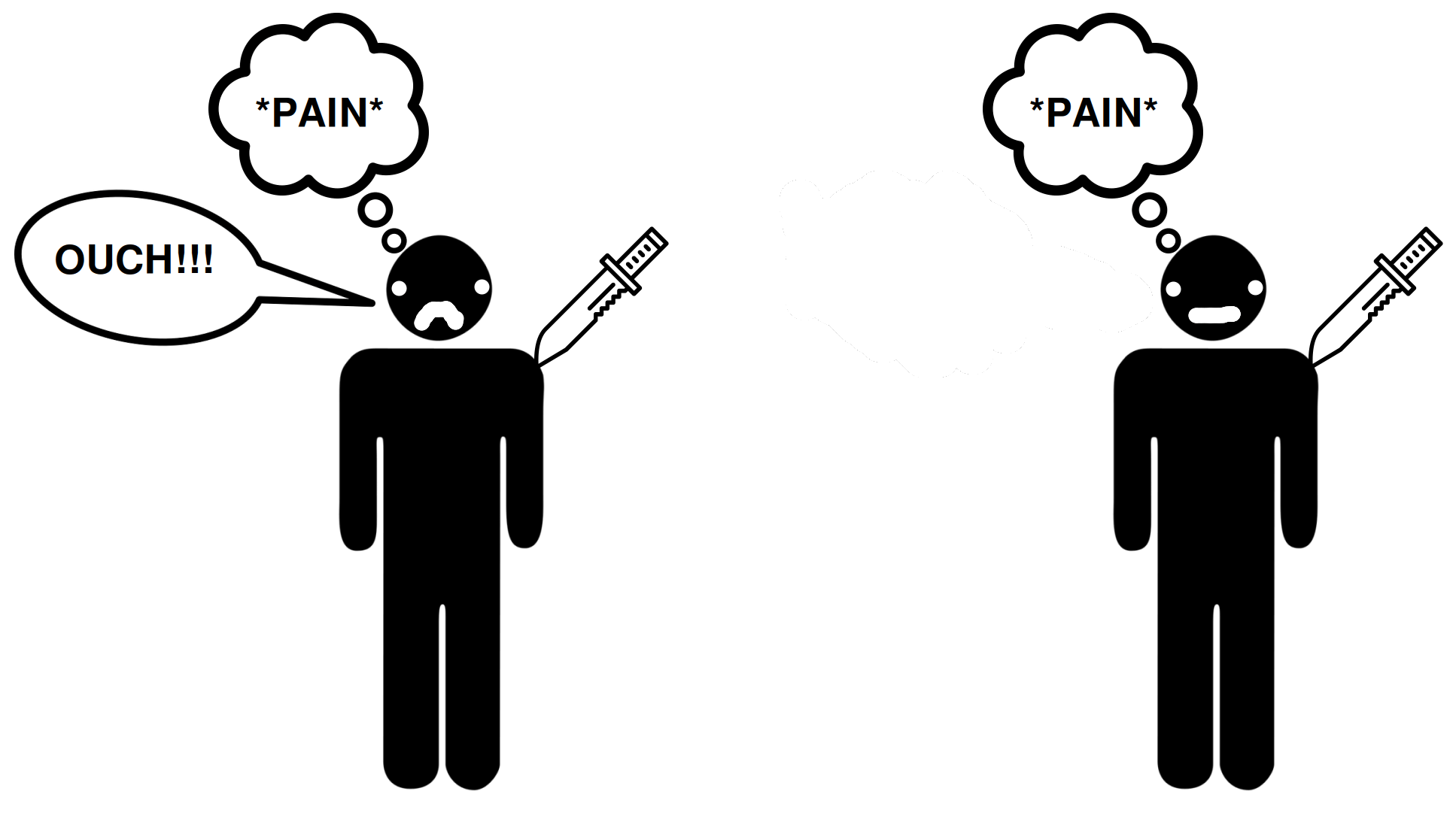

Asymmetry between self and other knowledge

When I stub my toe, I have direct access to the feeling of pain it produces. But if I see someone else in pain – however bad – it isn’t as direct. I don’t literally feel their pain (even if it does feel painful to watch).

Further, when I feel pain, there’s no way I could be mistaken as to what I’m feeling. However, if I see someone else scream “ouch!”, I might mistakenly believe they’re in pain when they’re only acting. When it comes to other people’s mental states, I can be mistaken.

It’s clear there’s a big difference between how you experience your own mental states and other people’s. But if behaviourism were true, this shouldn’t be the case.

Remember, according to behaviourism, mental states are behavioural dispositions. So to be in pain is to have certain behavioural dispositions.

It doesn’t feel like that though! If someone were to ask how I know I’m in pain it wouldn’t make sense to answer “because I winced” or “because I said ‘ouch!’” or give some other behavioural explanation. I just feel the pain and know I’m in pain from the unpleasant feeling.

But there’s no room for this ‘unpleasant feeling’ with behaviourism. Behaviourism analyses mental states solely in terms of behaviour.

So, in short, the argument looks like this:

- Behaviourism seems to rule out any asymmetry between self knowledge and knowledge of other people’s mental states

- There clearly is an asymmetry between self knowledge and knowledge of other people’s mental states

- Therefore, behaviourism is false

Possible response:

Ryle’s reply to this problem is to reject the asymmetry between self knowledge and knowledge of other people’s mental states. He argues that this apparent asymmetry is an illusion as a result of having far more evidence in the case of self-knowledge.

Super Spartans

Philosopher Hilary Putnam develops the asymmetry argument further with his example of ‘super Spartans’.

Super Spartans are an imagined community of people who completely suppress any outward demonstration of pain. They don’t wince, flinch, say “ouch!”, or anything like that. They have no dispositions toward pain behaviour – their heart rate doesn’t even increase.

Nevertheless, we can imagine the super Spartans do feel pain internally. They might not show it externally, but they would still experience a subjective experience of pain if they were tortured, say.

Remember, behaviourism says pain is a disposition to behave a certain way. But here we have an example of pain without the associated behavioural dispositions. So, the two things – pain and the behavioural dispositions – are two separate things. So, if super Spartans are possible, then behaviourism is false: it’s possible to have the mental state without the behavioural disposition.

Possible response:

The behaviourist could reply that without any sort of outward display it would be impossible to form the concept of pain. And without the concept of pain, it would be impossible for the super Spartans to distinguish which behaviour they were supposed to be suppressing in the first place. So Putnam’s example is incoherent.

Zombies

The zombie argument for property dualism can also be used to argue against behaviourism.

A zombie is basically the exact opposite of a super Spartan: where the Spartan has qualia but not behaviour, the zombie has behaviour but no qualia. It might say “ouch!” when it gets stabbed but it doesn’t feel any pain internally.

If zombies are possible, then it’s possible to have the behavioural dispositions associated with pain without actually being in pain. Therefore, the behavioural disposition of pain is separate from the feeling of pain. Therefore, behaviourism is false.

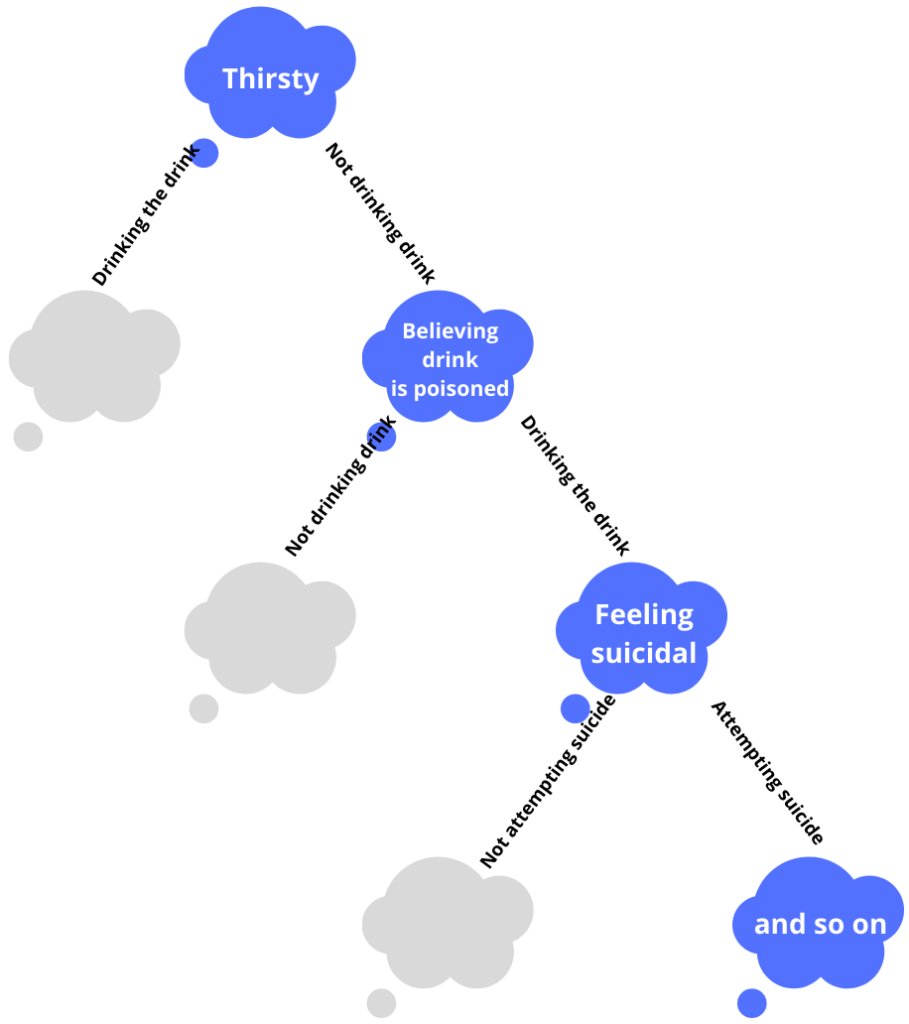

Multiple realisability

The same mental state can be realised through multiple different behaviours depending on a person’s other mental states. And these other mental states also need to be analysed in terms of behaviours, which again might vary depending on a person’s other mental states. This can go on forever.

For example, let’s say you and I both have the mental state of being thirsty.

This mental state of being thirsty would probably cause you to behave by drinking a drink if it was in front of you. But I might not drink the drink – despite having the same mental state of being thirsty – if I also have the mental state of believing that the drink is poisoned.

So, in order to explain why the mental state of me being thirsty leads to this particular behavioural disposition (i.e. not drinking), we need to appeal to another mental state. But this additional mental state also needs to be analysed in terms of behaviour.

The mental state of believing that a drink is poisoned would probably cause you to behave by avoiding the drink or pouring it away. But someone else might drink the drink – despite also believing that the drink is poisoned – if they also have the mental state of being suicidal.

And the mental state of being suicidal can also be realised in multiple different behaviours depending on a person’s other mental states, and so on and so on.

In short, it seems impossible for behaviourism to explain mental states as behaviours without assuming various other mental states. But these other mental states need a behavioural explanation too!
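The thirst example above can be put as a rough sketch in code. This is only an illustration, not anything from the syllabus or from Putnam: the function name `behaviour` and the state labels are made up for the example. It shows that the same mental state (being thirsty) maps to different behaviours depending on which other mental states are present, so no single behaviour can be read off from "thirsty" alone.

```python
def behaviour(sensory_input, total_state):
    """Return an action given an input and ALL of a person's current mental states.

    Hypothetical toy model: the state labels below are illustrative assumptions.
    """
    if sensory_input != "drink in front of you" or "thirsty" not in total_state:
        return "do nothing"
    # Believing the drink is poisoned blocks drinking, unless the person is also suicidal.
    if "believes drink is poisoned" in total_state and "suicidal" not in total_state:
        return "avoid the drink"
    return "drink"


you = {"thirsty"}
me = {"thirsty", "believes drink is poisoned"}
someone_else = {"thirsty", "believes drink is poisoned", "suicidal"}

for person in (you, me, someone_else):
    print(sorted(person), "->", behaviour("drink in front of you", person))
```

Notice that the function has to take the whole set of mental states as an argument. That is the behaviourist's problem in miniature: the behaviour can only be specified by mentioning other mental states, which behaviourism would then have to analyse in the same way.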

Circularity

We can further press the multiple realisability objection above to argue that this process of analysing mental states in terms of behaviour is circular. Behaviourism must assume other mental states in order to give an analysis of mental states in terms of behavioural dispositions.

And further, if you try to define those other mental states in terms of behavioural dispositions, you will end up back where you started.

Overly simplified example:

- To be in pain is to be disposed to say “ouch”

- But you won’t say “ouch” if you have a belief that the people you are with will think you are a wimp

- To have a belief that the people you are with will think you are a wimp is to be disposed to hide when you are in pain

Type identity

“All mental states are identical to brain states (ontological reduction) although ‘mental state’ and ‘brain state’ are not synonymous (so not an analytic reduction)”

Type identity theory is perhaps the most obvious physicalist theory of mind. It says that mental states reduce to brain states. Put simply, mental states are brain states.

An example often used in philosophy of mind to illustrate brain states is c-fibres. To say someone’s c-fibres are firing is just technical shorthand for the brain state associated with pain. And so, a type identity theorist would say that pain is identical to c-fibres firing, in the same way that lightning is identical to electrical discharge.

JJC Smart: Sensations and Brain Processes

Ontological reduction / contingent identity

JJC Smart claims that mental states and brain states are contingently identical. Another way of saying this is that mental states ontologically reduce to brain states (which is how the A level philosophy syllabus defines type identity theory).

Other examples of ontological reductions/contingently identical things are:

- Lightning is electrical discharge

- Water is H2O

- This table is an old suitcase

These relationships are not merely correlations. It’s not like electrical discharge happens at the same time as lightning, or that the electrical discharge causes the lightning – the lightning and the electrical discharge are the same thing.

According to type identity theory, it’s the same story with pain and c-fibres – they just are the same thing.

However, ontological reductions like this are not analytic reductions, such as “a bachelor is an unmarried man”. “A bachelor is an unmarried man” is an analytic reduction because the opposite idea (i.e. a married bachelor) is a contradiction. In contrast, even though “lightning is electrical discharge” is true, there is no logical contradiction in saying “lightning is not electrical discharge”.

So, according to type identity theory, ‘pain’ ontologically reduces to ‘c-fibres firing’, but it doesn’t analytically reduce to it.

Ockham’s razor

Note: Ockham’s Razor can be used as an argument against dualism and in favour of physicalism more generally. It’s not just an argument for type identity theory.

Ockham’s razor is a scientific/philosophical principle which says something like:

“Do not multiply entities beyond necessity”

A more colloquial formulation would be something like “the simplest explanation is the best”.

In practice what this means is that, if two theories make the same predictions, the theory that posits the fewest entities is likely to be the more accurate theory.

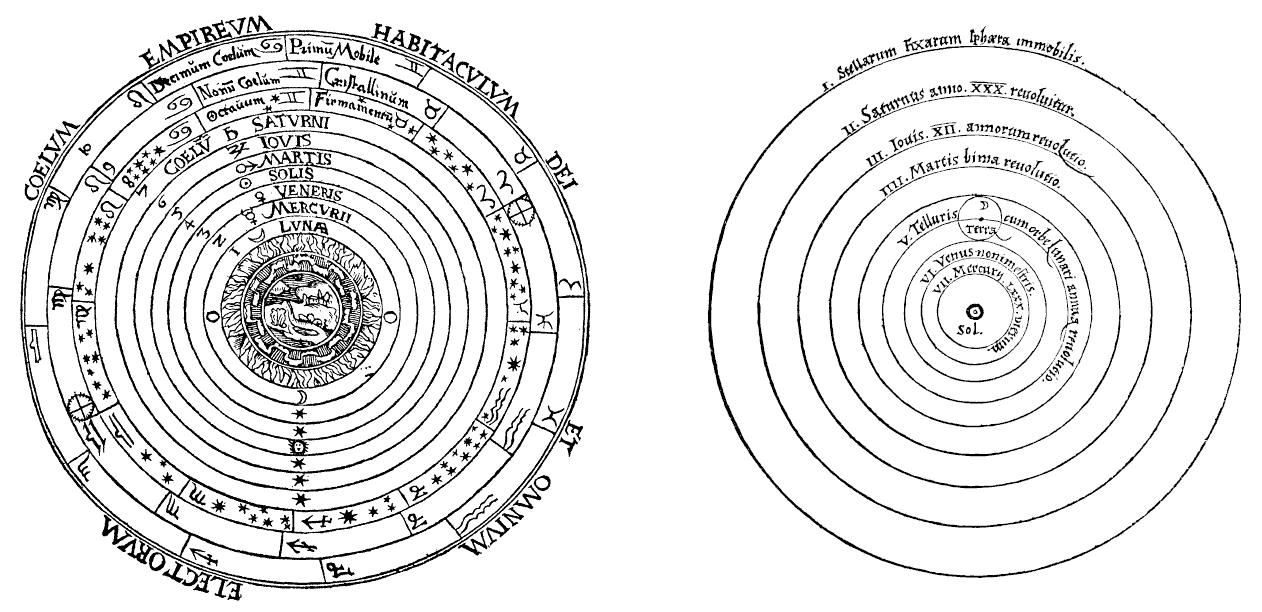

For example, back before it was widely accepted that the earth revolves around the sun, astronomers had to posit all these crazy forces and reasons to explain why planets and stars appeared in the sky when they did. This geocentric theory could make pretty accurate predictions. But the heliocentric theory made the same predictions using far fewer entities.

So, Ockham’s razor (correctly) suggests that the Earth revolves around the sun and not the other way round.

JJC Smart has a similar idea in mind when arguing against dualism: He argues that type identity theory can predict everything that dualism can, but type identity theory does so with one entity (the brain) rather than two (mind and brain).

If there are no overwhelming arguments or proof of dualism, Smart argues, we shouldn’t posit extra entities to explain the mind. We can explain just as much about mental states by referring to the brain as we can by referring to a non-physical mind. For example, when I feel pain, brain scans show that my c-fibres get activated. And when my c-fibres get activated, I feel pain. This suggests they are the same thing. We don’t need to posit an additional substance here.

So, where a dualist would say the c-fibres and the pain are two separate substances, type identity theory says they are the same physical thing (but different concepts).

Problems

Location Problem

If my c-fibres are firing, it’s presumably pretty easy to locate where this is happening. You could put me in an MRI scanner, for example, and find out the exact location of the c-fibres firing.

But my pain doesn’t seem to have the same physical location. It seems like it’s somewhere else. If you locate my c-fibres it doesn’t seem like you’ve located my subjective mental sensation of pain.

So the argument is something like this:

- If pain and c-fibres firing are identical then they must share all the same properties

- C-fibres have a precise physical location

- Pain does not have a precise physical location

- Therefore, pain and c-fibres firing are not identical
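The first premise of this argument relies on a principle usually called Leibniz's law (the indiscernibility of identicals). As a rough sketch, the argument can be written out formally. The symbols here are just labels chosen for this example: $p$ stands for pain, $c$ for c-fibres firing, and $L(x)$ for "x has a precise physical location".

```latex
\begin{align*}
&\text{Leibniz's law:} && a = b \rightarrow \forall F\,\bigl(F(a) \leftrightarrow F(b)\bigr) \\
&1. && p = c \rightarrow \bigl(L(p) \leftrightarrow L(c)\bigr) && \text{(instance of Leibniz's law)} \\
&2. && L(c) && \text{(premise)} \\
&3. && \neg L(p) && \text{(premise)} \\
&4. && \neg\bigl(L(p) \leftrightarrow L(c)\bigr) && \text{(from 2, 3)} \\
&5. && p \neq c && \text{(modus tollens, 1, 4)}
\end{align*}
```

The argument is valid, so a type identity theorist who wants to resist the conclusion has to reject a premise. The most likely candidate is premise 3, the claim that pain has no precise physical location.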

Zombies

The zombie argument used against behaviourism can also be used against type identity theory.

Remember, type identity theory says pain is identical to c-fibres firing. But we can imagine a zombie with the brain state (c-fibres firing) but not the mental state (pain).

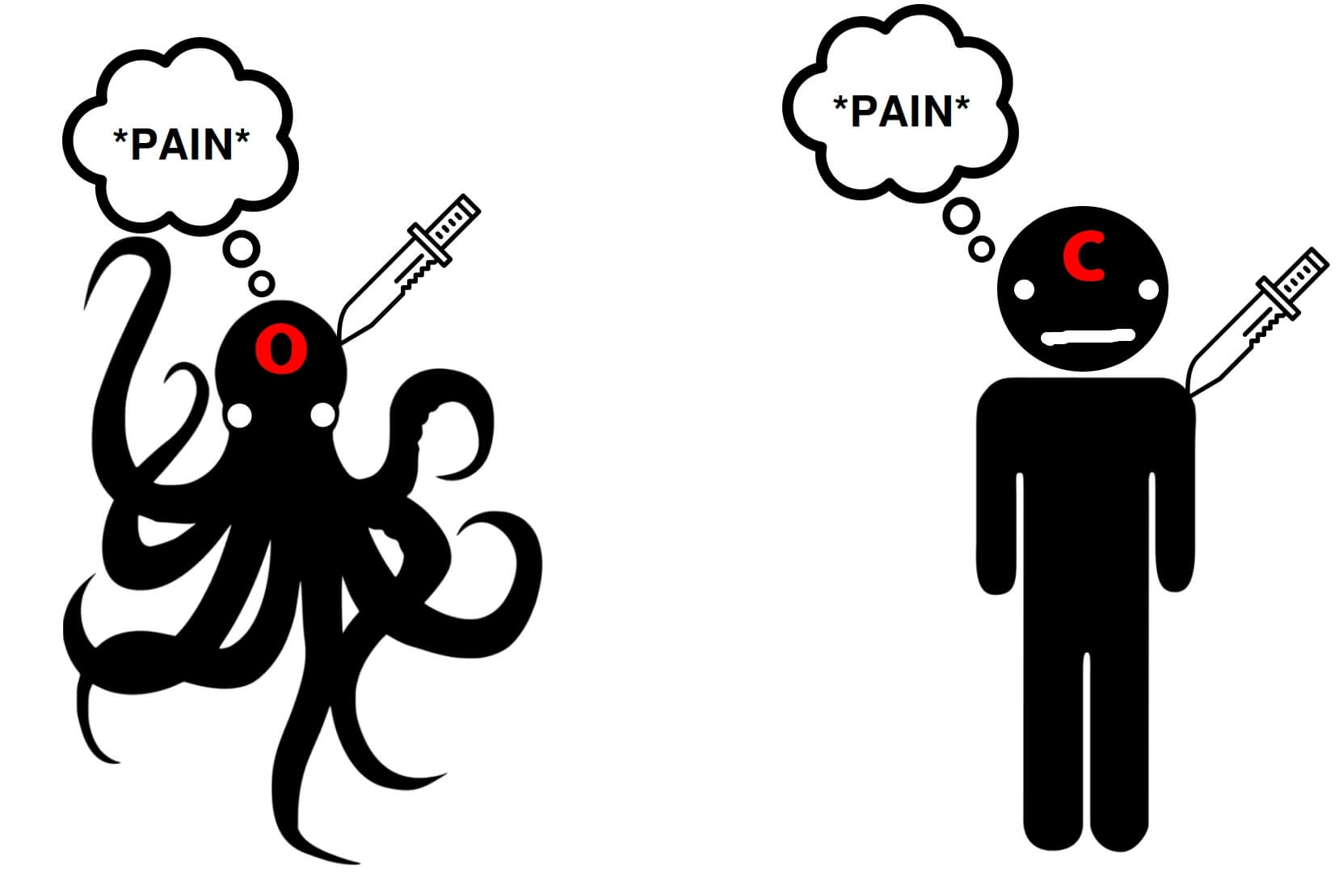

Multiple Realisability

Hilary Putnam argues that mental states, like pain, cannot be reduced to brain states, such as c-fibres firing, because mental states are multiply realisable. What this means is that the same mental state can come from many different brain states.

For example, an octopus has a very different brain setup to a human. Let’s say, for example, that octopuses have o-fibres instead of c-fibres.

If you stabbed an octopus and it writhed about, surely you would say that it’s because it’s in pain. But if type identity theory is true, this isn’t possible.

So the argument is something like this:

- If type identity theory is true, you cannot have the same mental state without having the same brain state

- An octopus and a human do not have the same brains or brain states

- But an octopus and a human can both experience the mental state of pain

- Therefore, type identity theory is false

An example that Putnam uses is silicon-based aliens. If type identity is true, then we can’t both share the belief “grass is green” because my brain is made from carbon and its brain is made from silicon. But this just seems wrong. We both share the same belief despite our differing physiology.

Functionalism

“All mental states can be characterised in terms of functional roles which can be multiply realised.”

Functionalism defines mental states as functional states within an organism.

For example, we might say the functional role of pain is an unpleasant sensation that causes the organism to get away from the thing that’s causing it harm. That function is what mental states, such as pain, are.

Functions should be understood within the context of the entire mind. So, the function of pain, for example, isn’t simply to cause behavioural dispositions (as behaviourism claims). Part of the function of pain is to cause other mental states – such as a belief that you are in pain, or a desire for the pain to stop.

Hilary Putnam: The Nature of Mental States

Arguments against type identity

Putnam makes the multiple realisability argument above as an objection to type identity theory, but this argument also supports functionalism.

This isn’t Putnam’s example, but think about what a knife is. A knife can be made from metal, or plastic, or wood – as long as it performs its function (to cut things). Similarly, Putnam would say that mental states such as pain can be experienced by a human, an octopus or an alien – the key feature of pain is its function.

We might say, for example, that the function of pain is to be an unpleasant sensation that causes a desire for the pain to stop and causes the organism to get away from the thing that’s causing it harm. This function is not specific to any physical instantiation, and so anything that serves this function – whether in a human, an octopus, an alien, or even a sufficiently complex computer – would count as pain. Functionalism thus avoids the multiple realisability objection to type identity theory.

Note: In theory, even some non-physical thing could serve the function of pain, and so functionalism is compatible with substance dualism. However, most functionalists are physicalists.
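Programmers may recognise the idea of multiple realisability as something like an interface with several implementations. The sketch below is only an analogy under made-up assumptions: the class names, the `substrate` labels, and the `PAIN_ROLE` dictionary are all invented for illustration (octopuses do not really have "o-fibres"). It shows three physically different systems that all count as being in pain because they all play the same functional role.

```python
from typing import Dict, Protocol

# The functional role of pain, as described in the text (simplified, hypothetical).
PAIN_ROLE = {
    "desire": "make the pain stop",
    "behaviour": "get away from the source of harm",
}


class DamageResponder(Protocol):
    """Anything that responds to bodily damage in some way."""

    substrate: str

    def respond_to_damage(self) -> Dict[str, str]: ...


class Human:
    substrate = "carbon-based brain with c-fibres"

    def respond_to_damage(self) -> Dict[str, str]:
        return dict(PAIN_ROLE)


class Octopus:
    substrate = "octopus nervous system with (made-up) o-fibres"

    def respond_to_damage(self) -> Dict[str, str]:
        return dict(PAIN_ROLE)


class SiliconAlien:
    substrate = "silicon-based brain"

    def respond_to_damage(self) -> Dict[str, str]:
        return dict(PAIN_ROLE)


def is_in_pain(system: DamageResponder) -> bool:
    # Functionalist test: only the role matters, never the substrate.
    return system.respond_to_damage() == PAIN_ROLE


systems = [Human(), Octopus(), SiliconAlien()]
for s in systems:
    print(s.substrate, "->", is_in_pain(s))
```

The function `is_in_pain` never looks at `substrate`. A type identity theorist, by contrast, would have to check the substrate and so would count only the human as being in pain.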

Arguments against behaviourism

Putnam makes the circularity argument against behaviourism above, but again it can be used as an argument to support functionalism.

Behaviourism is restricted to analysing mental states solely in terms of behavioural dispositions, but this causes problems when we realise that the same mental state can be realised by pretty much any behaviour (given other mental states). For example, being in pain may be analysed as a disposition to say “ouch!” – but this analysis doesn’t work when you also have the mental state of not wanting to look like a wimp. Reducing mental states to behavioural dispositions is either too simplistic or becomes circular when you bring in other mental states that also need to be analysed in terms of behaviours.

Functionalism avoids this problem because the function of mental states is more than simply behaviours – mental states can also cause other mental states. My mental state of pain, say, could serve the function of causing other mental states such as a belief that I am in pain and a desire for the pain to stop. My mental state of pain serves this function even if that mental state ends up having no effect on my behaviour. Functionalism thus avoids tying mental states to specific behaviours, and thus doesn’t end up having to give circular explanations of how a person can be in pain when their behaviour says otherwise, as behaviourism does.

Functionalism looks at the function of mental states within the mind as a whole, rather than its function in terms of specific behaviours:

“Since the behavior of the Machine (in this case, an organism) will depend not merely on the sensory inputs, but also on the Total State (i.e., on other values, beliefs, etc.), it seems hopeless to make any general statement about how an organism in such a condition must behave [i.e. like behaviourism does]”

Problems

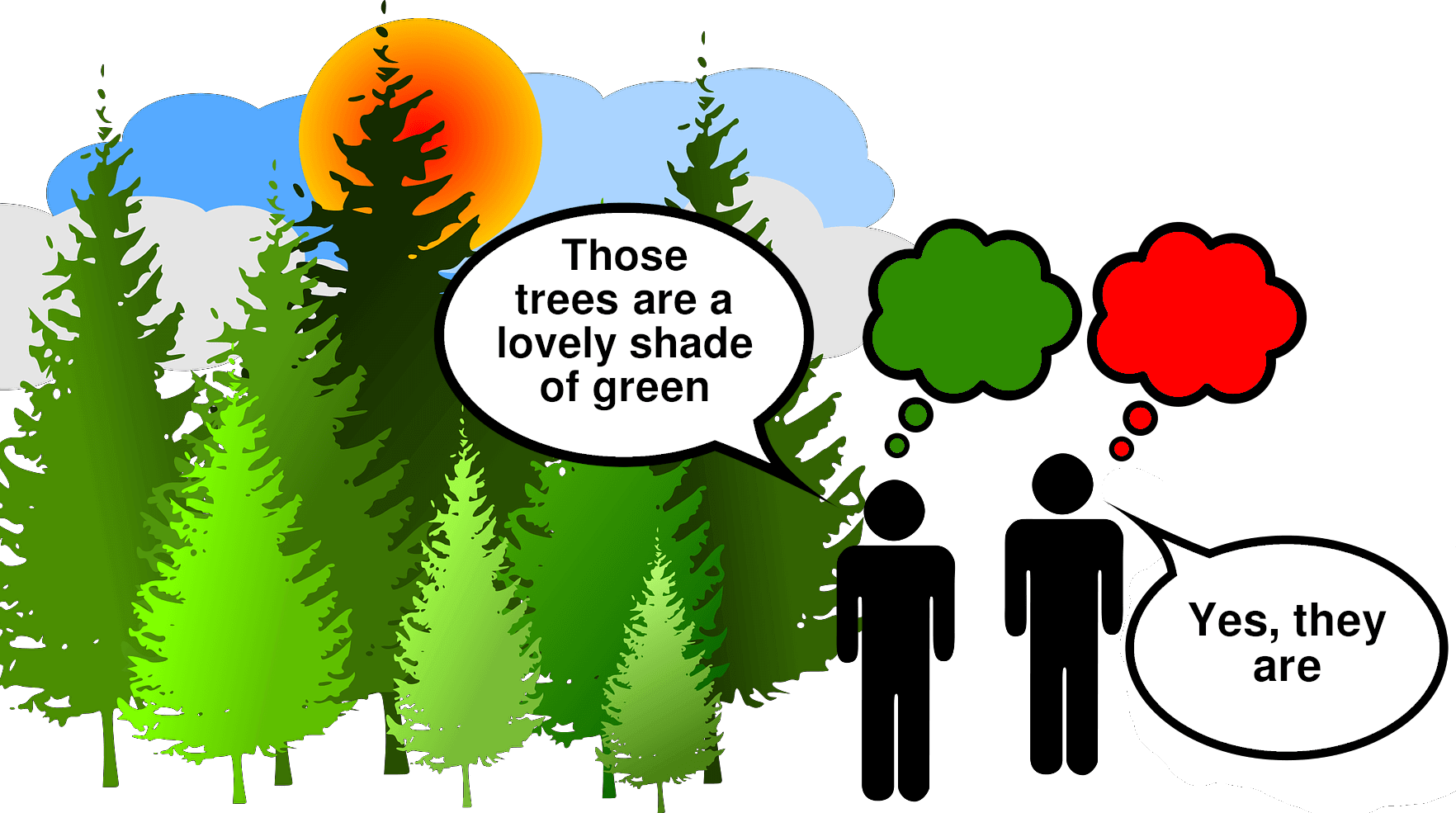

Rules out inverted spectrum

What if my experience of green was like your experience of blue and vice versa?

For example, if my qualia when I look at the sea are similar to your qualia when you look at grass.

When we both look at the sea, our mental states would be functionally identical. They would both, for example, cause us to believe “the sea is blue”.

And since our mental states are functionally identical, functionalism must say they are the same mental state. But they’re clearly not the same. My qualia are different from yours.

So the argument is something like:

- If functionalism is true, then two functionally identical mental states are the same mental state

- My mental state when I look at the sea is functionally identical to yours but phenomenally different

- Therefore our two mental states are not the same mental state

- Therefore functionalism is false

The China Brain

Ned Block’s China Brain thought experiment describes a setup that is functionally identical to a mind but is clearly not the same thing.

These are the key points:

- Imagine we have a complete functional description of human mental states

- A human body is hooked up to the entire population of China

- Every person in China is linked to other people (neurons) via two-way radios

- They communicate according to the rules set out in the complete functional description of human mental states described earlier

- Some of these people (neurons) are linked to the outputs of the body

- Imagine the Chinese population recreated the functions of the neurons

- So, the input leads to exactly the same output, and everything in between is functionally identical

Basically, the scenario above is designed to replicate a human brain. The population of China is roughly equal to the number of neurons in a brain and the two-way radios replicate the firings of the neurons.

According to functionalism, the China brain would actually be in pain, say, given the appropriate inputs (like being stabbed). But this is obviously false.

Just because the example of the China brain is functionally identical to human pain, doesn’t mean the China brain really is in pain. So functionalism is false. There’s clearly more to mental states than their function.

The Knowledge Argument

A version of the knowledge argument for property dualism can be used to criticise functionalism.

In the original argument, it is argued that all the physical facts would not be enough for Mary to know what it’s like to see red if she’d never seen it for herself. We can adapt this argument to say that all the physical and functional facts would not be enough for her to know what it’s like to see red either. In other words:

- Mary knows all the physical and functional facts about the mental state of seeing red

- But when she leaves the black and white room for the first time and sees red she learns something new about the mental state of seeing red

- So, there is more to the mental state of seeing red than simply the physical and functional facts

- So, functionalism is wrong – there is more to mental states than their function

Eliminative materialism

“Some or all common sense (‘folk psychological’) mental states/properties do not exist and our common-sense understanding is radically mistaken.”

Eliminative materialism rejects many of the assumed concepts common to all the other theories we’ve discussed (including dualism).

For example, many of the examples here used the concept of pain, assuming we both had a common understanding of what this refers to. But eliminative materialism doesn’t believe in using these concepts – or similar ones like belief, fear, happy, thought, etc.

Whereas other theories have tried to define these concepts – by their function, or physical instantiation, or whatever – eliminative materialism wants to eliminate them.

Eliminative materialism argues that terms like ‘belief’ and ‘pain’ don’t correspond to anything specific. They might be a useful and practical way of talking about mental states but when we actually look at what they really are, they can’t be reduced to anything in particular.

Eliminative materialists think that a proper analysis of mental states will look more like neuroscience, with specific descriptions of the mechanics of the brain.

Paul Churchland: Eliminative Materialism and the Propositional Attitudes

Folk psychology

Folk psychology refers to the everyday psychological concepts and explanations of behaviour we use. For example:

- He ran away because he was scared

- She got a drink because she was thirsty

- They ran out of the building because there was a panic

- He studied for the test because he wanted to get a good grade

Folk psychology is such an integral part of our language that it might be hard to even see what it is. It’s basically just the common sense way we talk about mental states.

The theories we’ve looked at so far seek to define folk psychology terms in various ways. For example, type identity theory reduces the folk psychology concept of pain to c-fibres. And functionalism reduces it to something different – a functional concept.

But both theories implicitly agree that pain is something that can be reduced. They just disagree about what it should be reduced to.

Eliminative materialism rejects the assumption that such folk psychology concepts refer to anything at all. So any reductionist account of mental states – such as type identity theory or functionalism – is doomed to fail. Instead, folk psychology concepts should be eliminated.

Folk psychology as a scientific theory

Folk psychology is something we just assume. But Churchland sees it as a scientific theory like any other. And the nature of scientific progress requires bad theories to be replaced by better ones.

Scientific theories have laws and rules. These laws can be used to make predictions. For example, the laws of gravity can be used to predict where Mars will be in the sky on 17th August 2027.

Folk psychology has its own set of laws and rules – but they’re loosely defined. These laws have reasonable predictive power but they’re not perfect. You can often predict how someone will act using folk psychology (e.g. when people get angry they shout and stomp about) – but not always.

There are other problems with folk psychology as a theory. For example, folk psychology can’t explain mental illness, sleep or learning.

And while pretty much every other scientific theory has advanced over time (e.g. Aristotle’s physics was replaced by Newton’s and then Einstein’s), folk psychology is still the same as it was thousands of years ago.

Finally, the intentionality in folk psychology doesn’t fit well with most other areas of science. We talk of having a thought about something, for example “I am thinking about an elephant”, but it’s not clear how a physical thing can be about anything in this way. A table or a chair doesn’t have intentionality in this way – it isn’t about anything. Nor do most other theories in science have intentionality – we don’t say gravity or temperature are about anything, for example.

Churchland takes all this to suggest folk psychology may not be the most accurate way to think about the mind.

Folk psychology vs. neuroscience

Given the problems with folk psychology described above, Churchland argues we should look to replace it with a more rigorous scientific theory such as neuroscience.

While it may be useful to use folk psychology as shorthand, we shouldn’t take it to be literally true. Engineers often use Newton’s equations to make their calculations because it’s quicker than using Einstein’s and the difference in outcome is so small as to make no difference. But even though Newton’s equations make accurate predictions in this way, they’re not technically accurate.

It’s a similar story with folk psychology vs. neuroscience. Churchland isn’t saying ordinary people should stop using words like ‘belief’ and ‘pain’. However, he is saying that when we’re doing science or philosophy of mind we shouldn’t use folk psychology terms because they’re not technically accurate. We should look to eliminate them in favour of the correct explanations.

Problems

Direct certainty of folk psychology

In rejecting folk psychology, eliminative materialism goes against many intuitions we have.

For example, Descartes took ‘I think’ to be his very first certainty. We could argue that the direct certainty we have about our own mental states should take priority over physicalist considerations.

Possible response:

However, this objection misunderstands eliminative materialism. Churchland is not denying the existence of the mental phenomena we refer to as ‘beliefs’, ‘pain’, ‘thought’, etc. He’s just saying that folk psychology isn’t the technically correct theory as to their nature.

Folk psychology has good predictive power

Churchland criticises folk psychology as a scientific theory for its explanatory and predictive failures. But we can respond that folk psychology does explain and make fairly accurate predictions about how people behave, such as the following:

- When he feels nervous he talks really fast

- If she has a belief that eating animals is wrong, she won’t order the chicken

- He shouted and stomped about because he was angry

- If she wins the lottery she will be happy and jump about cheering

- When he is in pain he swears loudly

In contrast, neuroscience is pretty bad at predicting behaviour – at least at present. The brain is a highly complex structure and this makes it incredibly difficult for neuroscience to model and predict even the simple behaviours in the bullet points above. It’s doubtful whether a team of the best neuroscientists in the world, using the most advanced equipment available today, could more accurately predict a typical human’s behaviour than folk psychology.

Self-refuting

If Churchland is arguing that we should believe eliminative materialism is true, then it seems his theory is self-refuting.

Eliminative materialism claims that beliefs don’t exist – they’re a mistaken folk psychology concept. But, in arguing for eliminative materialism, Churchland is expressing his belief in the truth of this theory. After all, why would anybody argue that something is true if they didn’t believe it was true? Arguments are expressions of belief and so, if Churchland believes that eliminative materialism is true, then this disproves his own theory: Churchland has proved that beliefs exist.

However, this objection arguably commits the fallacy of begging the question. It assumes the very thing it’s trying to prove: that beliefs exist. Churchland could just reply that what his opponent is calling a belief is actually something else (something with a neuroscientific explanation).

But we can push this objection further. Eliminative materialism criticises folk psychology for talking about intentional content (i.e. how thoughts can be about something) but offers no neuroscientific alternative. We may be able to eliminate beliefs, but eliminating intentionality is seemingly impossible. To even make sense of statements like “eliminative materialism is true” or “folk psychology is false” or “this is a more accurate scientific theory” we must presuppose intentionality – we must understand what these statements are about.

So, the argument that eliminative materialism is self-refuting reemerges: to even be able to talk about eliminative materialism requires intentionality, which is a folk psychology concept. It’s not clear how neuroscience could ever offer an alternative account of intentionality, and so folk psychology cannot ever be fully eliminated.